- #If you delete ivolume does the volume revert update#

- #If you delete ivolume does the volume revert windows#

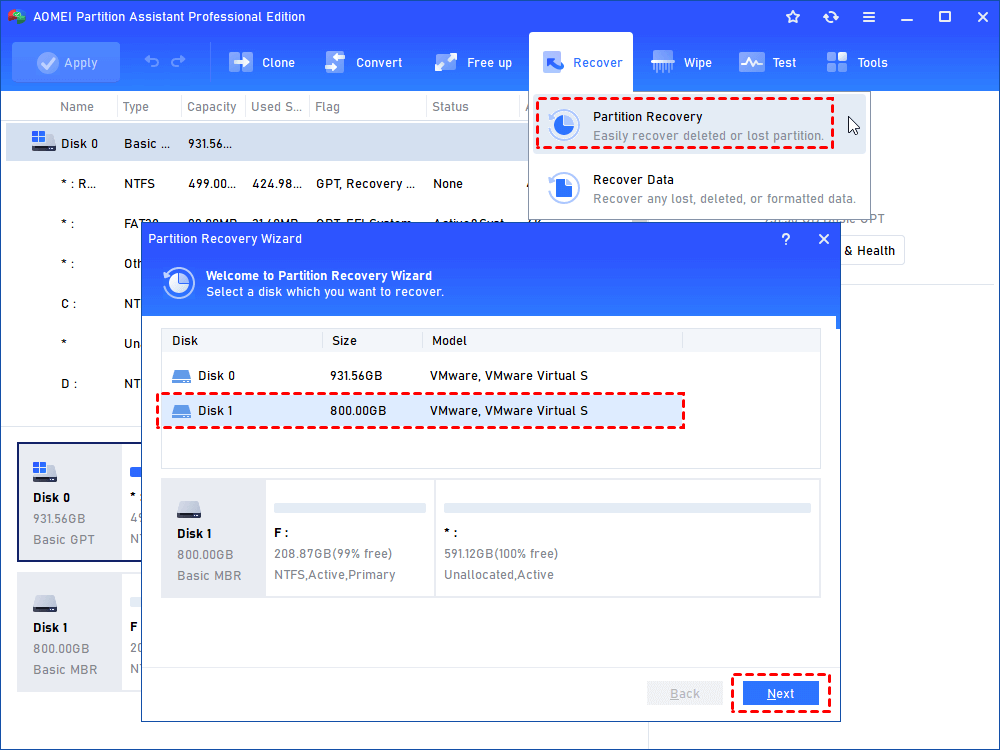

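The short version: set the PV to "Retain", let the PVC get deleted, then remove the previous claim from the PV.

1. Change the PV's reclaim policy to `Retain` (for example with `kubectl patch pv <pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'`), then verify that the reclaim policy of the PV is now Retain.
2. Shut down your pod/statefulset (and don't allow it to restart). Once that's finished, your PVC will get removed and the PV (and the disk it references!) will be left intact.
3. Open the PV in an editor (for example `kubectl edit pv <pv-name>`) and remove the entire "claimRef" section: delete every line from (and including) "claimRef:" up to the next key at the same indentation level. That section holds the reference to the old claim (its name, namespace and UID).
4. Save the changes and close the editor.
5. Check the status of the PV; it should now show "Available".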
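For the "claimRef" removal step, the section to delete usually looks something like the excerpt below. This is only an illustrative sketch: the claim name, namespace, resource version and UID are placeholders rather than values from the original post, and your PV will contain other fields around it.

```yaml
# Illustrative excerpt of a PV spec; all names and IDs are placeholders.
spec:
  capacity:
    storage: 10Gi
  claimRef:                       # remove from this line...
    apiVersion: v1
    kind: PersistentVolumeClaim
    name: name-of-pvc
    namespace: default
    resourceVersion: "1234567"
    uid: 00000000-0000-0000-0000-000000000000   # ...through this line
  persistentVolumeReclaimPolicy: Retain         # keep: next key at the same indentation
```

With the claim reference gone, the PV no longer points at the deleted PVC, which is why its status can return to "Available".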

It is, in fact, possible to save the data from a PersistentVolume with `Status: Terminating` and the reclaim policy left at the default (`Delete`). We did this on GKE; I am not sure about AWS or Azure, but I would guess they are similar.

We had the same problem, so I am posting our solution here in case somebody else runs into it.

Your PersistentVolumes will not be terminated while there is a pod, a deployment or, to be more specific, a PersistentVolumeClaim using them.

The steps we took to remedy our broken state:

1. Once you are in a situation like the OP's, the first thing to do is create a snapshot of your PersistentVolumes. In the GKE console, go to Compute Engine -> Disks, find your volume there (use `kubectl get pv | grep pvc-name` to identify it) and create a snapshot of it.
2. Use the snapshot to create a disk: `gcloud compute disks create name-of-disk --size=10 --source-snapshot=name-of-snapshot --type=pd-standard --zone=your-zone`
3. At this point, stop the services using the volume, then delete the volume and the volume claim.
4. Recreate the volume manually, backed by the disk you just created from the snapshot.
5. Now update your volume claim to target that specific volume; this goes on the last line of the claim's YAML file.

Edit: This only applies if you deleted the PVC and not the PV.
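The manifests for recreating the volume and pinning the claim to it did not survive in this copy of the post. A minimal sketch of what they might look like follows. It assumes a GCE persistent disk named `name-of-disk` (as in the `gcloud` command), an ext4 filesystem, no storage class, and the hypothetical names `name-of-pv` and `name-of-pvc`; clusters that use the Compute Engine CSI driver would likely need a `csi:` volume source instead of `gcePersistentDisk:`.

```yaml
# Hypothetical PersistentVolume backed by the disk restored from the snapshot.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: name-of-pv                 # assumed name, pick your own
spec:
  capacity:
    storage: 10Gi                  # should match the size of the restored disk
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""             # assumption: static binding without a storage class
  gcePersistentDisk:
    pdName: name-of-disk           # the disk created from the snapshot
    fsType: ext4                   # assumption: adjust to the disk's filesystem
---
# Hypothetical claim that targets the volume above by name.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: name-of-pvc                # assumed name, keep the one your workload references
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ""             # must match the PV's storage class
  resources:
    requests:
      storage: 10Gi
  volumeName: name-of-pv           # last line: bind to this specific PV
```

Setting `volumeName` asks Kubernetes to bind the claim to that particular PV rather than provisioning a new, empty one.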
